Azure DevOps (ADO) can associate Test Cases with automated test methods when you use a supported test framework. The list of supported frameworks can be found here.

However, if you write tests with Selenium Java or another framework that is not supported, but still want to track your results against a Test Suite in ADO, you need a solution that updates the Test Cases / Test Points in ADO based on the actual test results.

One approach is to use the ADO REST APIs to push the results back to ADO after the automated tests have run independently. This document explains the steps required to create a test run, add test points, and update the results using ADO’s REST APIs. A generic solution will ideally have a configuration file or some other mechanism that maps a test method to a test point in ADO.
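As an illustration of the mapping idea, here is a minimal Python sketch of such a configuration. The JSON layout, the method names under `com.example`, and the helper names `load_point_mapping` and `point_ids_for` are all hypothetical; any format that links a test method to a point ID would serve the same purpose.

```python
import json

# Hypothetical mapping file content: fully qualified test method -> ADO test point ID.
MAPPING_JSON = """
{
  "com.example.api.LoginTest.validLogin": 1,
  "com.example.api.LoginTest.invalidPassword": 2
}
"""


def load_point_mapping(text):
    """Parse the mapping and check that every value is an integer point ID."""
    mapping = json.loads(text)
    for method, point_id in mapping.items():
        if isinstance(point_id, bool) or not isinstance(point_id, int):
            raise ValueError(f"point ID for {method!r} must be an integer")
    return mapping


def point_ids_for(executed_methods, mapping):
    """Return the point IDs of the executed methods, skipping unmapped ones."""
    return [mapping[m] for m in executed_methods if m in mapping]


mapping = load_point_mapping(MAPPING_JSON)
executed = ["com.example.api.LoginTest.validLogin", "com.example.api.Unmapped.test"]
print(point_ids_for(executed, mapping))
```

Keeping the mapping outside the test code means the test framework only has to report method names and outcomes; the translation to ADO point IDs happens in one place.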

Background:

A test point is a unique combination of test case, test suite, configuration, and tester. For example, if a test case exists in two suites, or under multiple configurations in the same suite, it will have a different test point for each. More about test points can be found on this Microsoft page.

The image below shows a typical Test Plan / Test Suite / Test Case / Test Point combination, along with the test run and outcome:

Below are the steps to be performed:

- Identify the test points that need to be executed.
- Create a test run with the test points.
- Update the outcome.

Below we have a test plan, “DemoAPI”, with a suite, “APITest”, containing 2 test cases. The 2 test cases in the suite have 2 different test points.

The test points for the test cases need to be determined first, using an API call:

Step 1:

Example:

Response:
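A minimal Python sketch of this step follows. It assumes the Points - List endpoint from the first reference, authentication with a personal access token (PAT) sent as HTTP Basic auth, and a response whose `value` array carries each point's `id` and `testCase.id`. The function names and the sample response are hypothetical and only for illustration.

```python
import base64
import json
import urllib.request

API_VERSION = "5.1"


def build_points_request(organization, project, plan_id, suite_id, pat):
    """Build (but do not send) the GET request that lists a suite's test points."""
    url = (
        f"https://dev.azure.com/{organization}/{project}/_apis/test/"
        f"Plans/{plan_id}/Suites/{suite_id}/points?api-version={API_VERSION}"
    )
    # Assumption: PAT auth, sent as Basic auth with an empty user name.
    token = base64.b64encode(f":{pat}".encode()).decode()
    return urllib.request.Request(
        url, headers={"Authorization": f"Basic {token}"}, method="GET"
    )


def extract_point_ids(response_body):
    """Map test case ID -> test point ID from a points-list response body."""
    payload = json.loads(response_body)
    return {point["testCase"]["id"]: point["id"] for point in payload["value"]}


# Hypothetical, trimmed response used only to exercise the parser.
SAMPLE_RESPONSE = """
{"count": 2, "value": [
  {"id": 1, "testCase": {"id": "101"}},
  {"id": 2, "testCase": {"id": "102"}}
]}
"""

request = build_points_request("my-org", "my-project", 1, 2, "my-pat")
print(request.get_method(), request.full_url)
print(extract_point_ids(SAMPLE_RESPONSE))
# To send the request for real: urllib.request.urlopen(request).read()
```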

Once we have the test points, we need to create a test run that includes those points in order to start execution.

Step 2:

Example:

Body:

{
  "name": "API Demo Run",
  "plan": {
    "id": "1"
  },
  "pointIds": [
    1,
    2
  ]
}
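The request body above could be assembled and posted along these lines. This is a sketch under assumptions: it uses the Runs - Create endpoint from the second reference, PAT-based Basic auth, and a response that exposes the new run's `id`. The function names and the sample response are hypothetical.

```python
import base64
import json
import urllib.request


def build_create_run_request(organization, project, name, plan_id, point_ids, pat):
    """Build (but do not send) the POST request that creates a test run."""
    url = (
        f"https://dev.azure.com/{organization}/{project}"
        "/_apis/test/runs?api-version=5.1"
    )
    body = {"name": name, "plan": {"id": str(plan_id)}, "pointIds": list(point_ids)}
    # Assumption: PAT auth, sent as Basic auth with an empty user name.
    token = base64.b64encode(f":{pat}".encode()).decode()
    headers = {
        "Authorization": f"Basic {token}",
        "Content-Type": "application/json",
    }
    data = json.dumps(body).encode("utf-8")
    return urllib.request.Request(url, data=data, headers=headers, method="POST")


def extract_run_id(response_body):
    """Read the ID of the newly created run from the response body."""
    return json.loads(response_body)["id"]


request = build_create_run_request(
    "my-org", "my-project", "API Demo Run", 1, [1, 2], "my-pat"
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# Hypothetical, trimmed response used only to exercise the parser.
print(extract_run_id('{"id": 12, "name": "API Demo Run"}'))
```

The order of `point_ids` is preserved in the body, which matters later when result IDs are matched back to points.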

Response:

The response contains the ID of the newly created test run along with its details. If we now go to “Runs” in ADO, we should see a new test run with the run ID from the response (12 in this case) and the run name “API Demo Run”.

If we select that test run and view its test results, we can see the same 2 test points in an unspecified state.

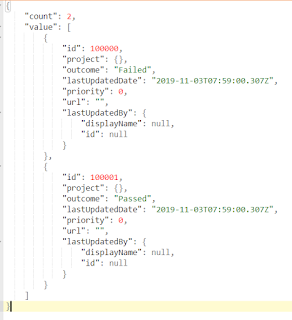

The test points for which execution has started now have corresponding result IDs generated within the test run. These start at 100000 and increment by 1. The order of the generated IDs follows the order of the test points provided in the body of the POST. In this example the points were given in the order 1, 2, so their result IDs are 100000 and 100001 respectively.
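The numbering rule described above can be written as a small helper. This is only a sketch of that rule; the names `FIRST_RESULT_ID` and `result_ids_by_point` are hypothetical. A more defensive implementation might read the run's results back from ADO instead of computing the IDs.

```python
FIRST_RESULT_ID = 100000  # first result ID inside a run, as described above


def result_ids_by_point(point_ids):
    """Map each point ID to its result ID, following the order used in the POST body."""
    return {
        point_id: FIRST_RESULT_ID + index
        for index, point_id in enumerate(point_ids)
    }


print(result_ids_by_point([1, 2]))  # {1: 100000, 2: 100001}
```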

Step 3:

Now we need to post an outcome and state against the result IDs (100000, 100001) generated in the test run, using the API.

PATCH https://dev.azure.com/{organization}/{project}/_apis/test/Runs/{runId}/results?api-version=5.1

Example:

Body:

[
  {
    "id": 100000,
    "state": "Completed",
    "outcome": "Failed"
  },
  {
    "id": 100001,
    "state": "Completed",
    "outcome": "Passed"
  }
]
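A Python sketch of this PATCH call is shown below, again assuming PAT-based Basic auth. The function name `build_update_results_request` and the `outcomes` dictionary (result ID to outcome string) are hypothetical conveniences, not part of the ADO API.

```python
import base64
import json
import urllib.request


def build_update_results_request(organization, project, run_id, outcomes, pat):
    """Build (but do not send) the PATCH request that sets result outcomes."""
    url = (
        f"https://dev.azure.com/{organization}/{project}"
        f"/_apis/test/Runs/{run_id}/results?api-version=5.1"
    )
    # One entry per result ID; every result is marked as completed.
    body = [
        {"id": result_id, "state": "Completed", "outcome": outcome}
        for result_id, outcome in outcomes.items()
    ]
    # Assumption: PAT auth, sent as Basic auth with an empty user name.
    token = base64.b64encode(f":{pat}".encode()).decode()
    headers = {
        "Authorization": f"Basic {token}",
        "Content-Type": "application/json",
    }
    data = json.dumps(body).encode("utf-8")
    return urllib.request.Request(url, data=data, headers=headers, method="PATCH")


request = build_update_results_request(
    "my-org", "my-project", 12, {100000: "Failed", 100001: "Passed"}, "my-pat"
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# To send the request for real: urllib.request.urlopen(request).read()
```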

Response:

Refresh the test run page in ADO, and we can see that the state of the test run has changed from “In Progress” to “Needs Investigation”:

ADO shows “Needs Investigation” because the test run contains failed test cases.

To see the results, navigate inside the test run:

Now if we navigate to the Test Plans page, we can see that the test execution result has been updated:

Common Pitfall

Creating too many test runs. Do not follow a strategy that creates one test run per test point; this would result in too many test runs being created. Instead, create a single test run and add to it all the points that you plan to execute.

References:

1. https://docs.microsoft.com/en-us/rest/api/azure/devops/test/points/list?view=azure-devops-rest-5.1
2. https://docs.microsoft.com/en-us/rest/api/azure/devops/test/runs/create?view=azure-devops-rest-5.1
3. https://docs.microsoft.com/en-us/rest/api/azure/devops/test/results/update?view=azure-devops-rest-5.1

